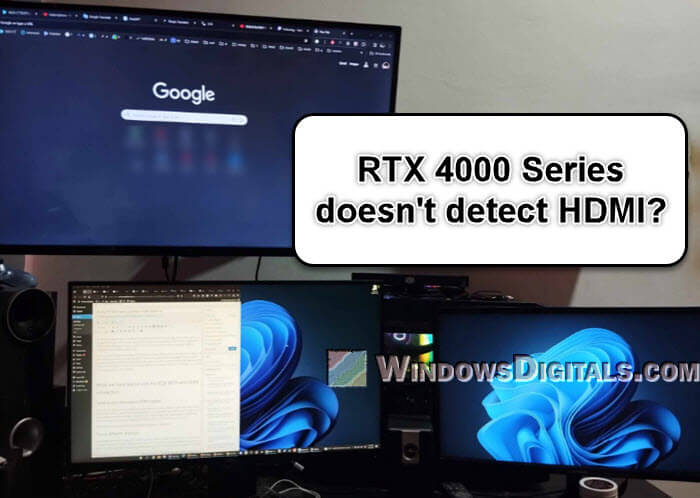

We recently upgraded one of our office computers to an NVIDIA GeForce RTX 4070, moving up from an old RTX 2070 Super. With the 2070, the computer drove four displays: three via DisplayPort (main monitor, secondary monitor, and an Oculus Quest) and a fourth, a standard 1080p TV, connected with an HDMI-to-HDMI cable.

However, after installing the new RTX 4070, Windows stopped detecting the HDMI connection, while all displays connected through DisplayPort continued to work perfectly. We suspect this issue might affect other RTX 40 series graphics cards as well, such as the RTX 4060, 4080, and 4090. If you’re facing the same issue, this guide walks through what we tested and how we eventually solved it.

What we tested with the RTX 4070 and HDMI connection

Test with different HDMI cables

One of the very first things we did to figure out the problem was to try a different HDMI cable. HDMI cables can sometimes be the troublemaker in connectivity issues, so we wanted to check that off the list. We swapped out the original cable for another one that we knew was working well with other devices. Sadly, this didn’t solve the problem, as Windows still didn’t detect the HDMI connection to the TV.

Try different displays

Next, we decided to check the RTX 4070’s HDMI port with a different display. Instead of the TV, we hooked up a standard computer monitor using the HDMI cable. This was to see if the problem was just with the TV or a wider issue with the HDMI output. Sadly, we ran into the same problem: the display worked fine during the boot process but went black as soon as Windows started loading.

Switch ports

We also tried changing the ports used for the DisplayPort and HDMI connections. We thought there might be a conflict or priority issue with how the graphics card was managing the different outputs. Unfortunately, this didn’t work either. The HDMI-connected display stayed undetected by Windows, while the DisplayPort-connected monitors worked fine.

These tests helped us conclude that the issue wasn’t with the cable, the TV, or the specific ports on the graphics card. Instead, it seemed to be about how the graphics card was interacting with Windows once the operating system started loading.
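To check what Windows itself reports, rather than relying on whether a screen lights up, you can query the monitors it currently sees. The following is a minimal Python sketch, not the method we used during our tests. It assumes PowerShell is available and reads the `WmiMonitorConnectionParams` WMI class; the helper names `parse_monitors` and `query_monitors` are our own, and the connection-type table covers only a few common values.

```python
import json
import subprocess

# Subset of the D3DKMDT_VIDEO_OUTPUT_TECHNOLOGY codes that Windows reports.
VIDEO_OUTPUT_NAMES = {
    0: "VGA",
    4: "DVI",
    5: "HDMI",
    10: "DisplayPort (external)",
    11: "DisplayPort (embedded)",
    2147483648: "Internal panel",
}

# PowerShell query against the WmiMonitorConnectionParams class in root\wmi.
PS_QUERY = (
    "Get-CimInstance -Namespace root\\wmi -ClassName WmiMonitorConnectionParams | "
    "Select-Object InstanceName, VideoOutputTechnology | ConvertTo-Json"
)


def parse_monitors(json_text):
    """Turn the ConvertTo-Json output into (instance_name, connection) pairs."""
    if not json_text.strip():
        return []  # nothing was reported
    data = json.loads(json_text)
    if isinstance(data, dict):
        data = [data]  # ConvertTo-Json emits a bare object for a single result
    monitors = []
    for entry in data:
        code = entry["VideoOutputTechnology"]
        connection = VIDEO_OUTPUT_NAMES.get(code, f"Other ({code})")
        monitors.append((entry["InstanceName"], connection))
    return monitors


def query_monitors():
    """Run the PowerShell query (Windows only) and parse its output."""
    result = subprocess.run(
        ["powershell", "-NoProfile", "-Command", PS_QUERY],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_monitors(result.stdout)
```

On a Windows machine, calling `query_monitors()` and printing each pair lists the detected displays with their connection type. In a situation like ours, we would expect the DisplayPort monitors to show up and no entry marked "HDMI".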

What we noticed during our tests

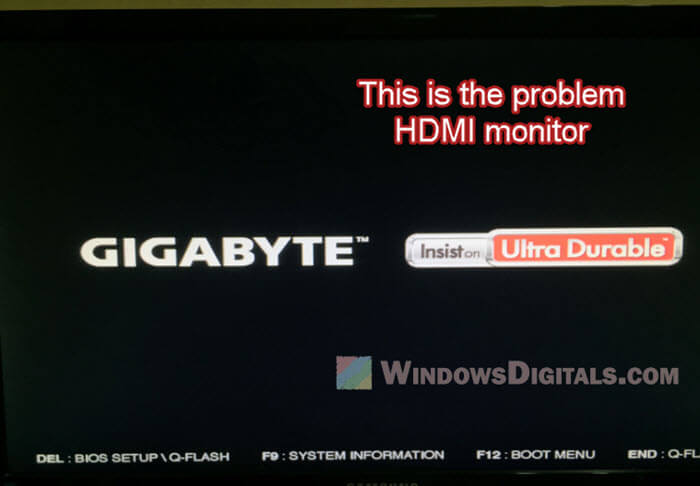

Behavior during boot process

One important thing we noticed was the behavior of the HDMI-connected display during the boot process. When the computer was turned on with only the HDMI-connected display hooked up (all DisplayPort displays disconnected), the display showed the motherboard’s logo and the initial boot screens. This showed that the graphics card was physically able to send a signal through the HDMI port.

Black screen when Windows starts

However, as soon as Windows began to load, the HDMI-connected display would go black. This sudden loss of signal suggested that the problem wasn’t with the hardware itself but rather with how the graphics card’s drivers were interacting with Windows. It seemed that the drivers were somehow disabling or not properly initializing the HDMI output once the operating system took over from the basic input/output system (BIOS) or Unified Extensible Firmware Interface (UEFI).

Based on these observations, we figured out that the problem was likely related to the graphics card drivers. This was a big breakthrough, as it meant that the issue could potentially be fixed through software updates rather than having to replace or fix any hardware parts. With this in mind, we moved on to test different driver-related fixes to see if we could get the HDMI-connected display working again.

What actually worked to fix the issue

Roll back the driver

Our first attempt at fixing the issue was to roll back the driver for the RTX 4070. We thought a recent driver update might have caused a compatibility issue with our HDMI setup. To test this, we went back to an older version of the driver that we knew had worked well with our system before the upgrade. Luckily, this fixed the problem, and Windows detected the HDMI-connected display again. The steps below show how to roll back the driver through Device Manager.

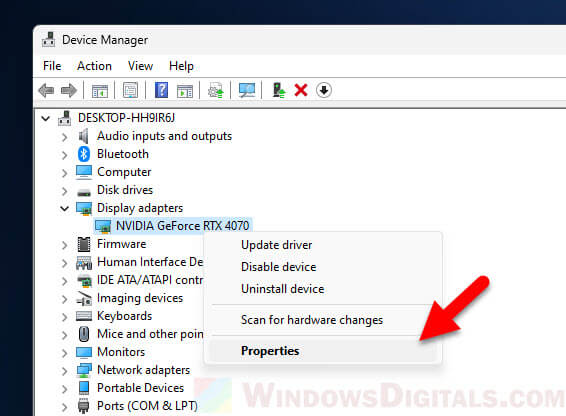

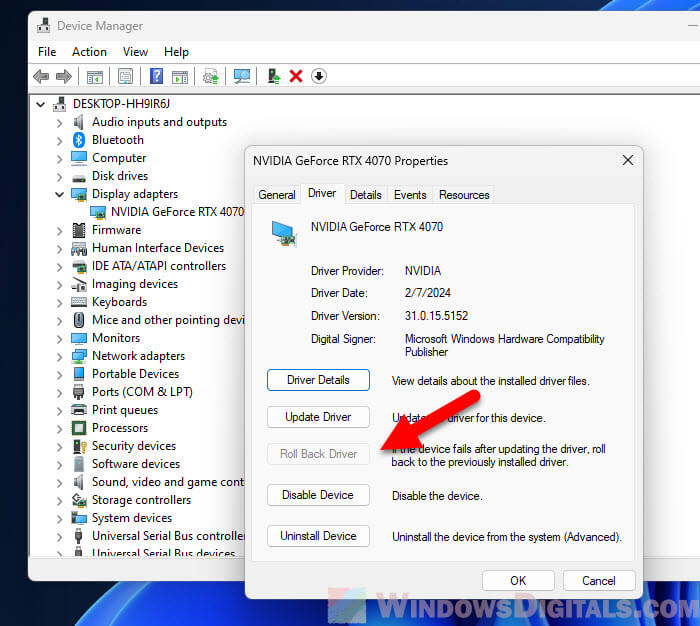

- Right-click on the Start menu and select “Device Manager.”

- Expand the “Display adapters” section to find the NVIDIA GeForce RTX 4070.

- Right-click on the RTX 4070 and select “Properties.”

- In the Properties window, switch to the “Driver” tab.

- Click on the “Roll Back Driver” button. If this button is grayed out, it means there’s no older driver to go back to. In our case, we had already manually removed the old driver, so the option is grayed out in the screenshot.

- You may be asked to give a reason for rolling back the driver. Pick the most fitting option and proceed.

- After the rollback is done, restart your computer to make sure the changes take effect.
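Before and after a rollback, it can help to confirm which driver version is actually installed. Here is a minimal Python sketch, assuming `nvidia-smi` (which ships with the NVIDIA driver) is available on the PATH. The helper names `parse_gpu_lines` and `installed_driver_versions` are our own, chosen for illustration.

```python
import subprocess

# Ask nvidia-smi for the GPU name and driver version as header-less CSV.
NVIDIA_SMI_ARGS = [
    "nvidia-smi",
    "--query-gpu=name,driver_version",
    "--format=csv,noheader",
]


def parse_gpu_lines(output):
    """Parse 'name, driver_version' lines into (name, version) tuples."""
    gpus = []
    for line in output.splitlines():
        if not line.strip():
            continue  # skip blank lines
        # Split on the last comma so the version is always the final field.
        name, _, version = line.rpartition(",")
        gpus.append((name.strip(), version.strip()))
    return gpus


def installed_driver_versions():
    """Run nvidia-smi and return one (name, version) tuple per GPU."""
    result = subprocess.run(
        NVIDIA_SMI_ARGS, capture_output=True, text=True, check=True
    )
    return parse_gpu_lines(result.stdout)
```

Running `installed_driver_versions()` once before the rollback and once after the restart should show the version number changing. If it stays the same, the rollback most likely did not take effect.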

Choose “Custom installation”

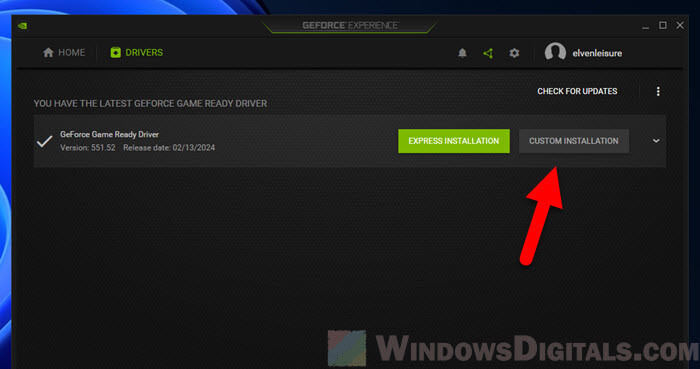

Although rolling back the driver worked as a temporary fix, we weren’t happy running an outdated driver on our new RTX 4070. Newer drivers bring bug fixes and performance improvements that we didn’t want to give up over this one problem. So we decided to try updating the driver again, but this time we installed it differently.

When we updated the driver through NVIDIA GeForce Experience, instead of clicking “Express installation” as we normally would, we chose the “Custom installation” option. Custom installation includes an option called “Clean installation”, which removes any leftovers of the previous drivers before installing the new one. This solved the issue for us while letting us stay on the latest driver.

We also chose not to install the NVIDIA HD Audio driver, as we don’t use audio from the GPU. We’re not sure whether this contributed to the fix, but you can try it too if you don’t need audio from your GPU.

The result

After doing the custom driver installation with the clean installation option, we rebooted our system. We were happy to see that Windows successfully detected the HDMI-connected display, and all four displays were working as they should. This solution let us use the latest driver for our RTX 4070 without breaking our multi-display setup.